Web scraping is a powerful technique for extracting data from websites, and Selenium is one of the most effective tools for this purpose. Designed primarily for browser automation, Selenium shines when dealing with dynamic web pages that load content via JavaScript. In this blog, we’ll explore how to set up Selenium web scraping, provide practical examples, and offer best practices for using Selenium effectively.

What is Web Scraping?

Web scraping allows for the automation of data extraction from websites, making it invaluable for tasks like data analysis, market research, and content aggregation. While some websites provide APIs for easy data access, the web scraping process gives you a flexible way to retrieve data when APIs aren’t available or are limited in scope.

You might use web scraping to collect product listings from e-commerce sites, gather news articles for research, or analyze trends across social media platforms.

Why Use Selenium for Web Scraping?

If you’re scraping websites that load content dynamically using JavaScript, Selenium is a powerful tool to consider. Unlike static web pages, which can be scraped with simpler libraries like BeautifulSoup and Requests, dynamic websites require JavaScript execution to fully load their content. Selenium is built for this challenge, as it not only handles JavaScript but also simulates user interactions, making it versatile and ideal for scraping modern, JavaScript-heavy sites.

Selenium vs Traditional Scraping Libraries

Traditional libraries like BeautifulSoup and Requests are faster and more lightweight, making them a better choice for static pages that don’t require JavaScript to load content. However, if you’re working with interactive elements or content that only loads after user actions, Selenium is unmatched in its ability to simulate these interactions and retrieve the data you need.

When deciding on a scraping tool, it’s helpful to understand the differences between Selenium and traditional scraping libraries like BeautifulSoup and Requests. Here’s how they are different:

| Feature | Selenium | BeautifulSoup + Requests |
| --- | --- | --- |
| JavaScript Handling | Yes | No |
| Browser Simulation | Yes, operates a real browser | No, doesn’t simulate a browser |
| Speed | Slower (runs a full browser) | Faster (no browser overhead) |
| Best Use Cases | Dynamic pages rendered with JavaScript | Static pages whose content is in the initial HTML |

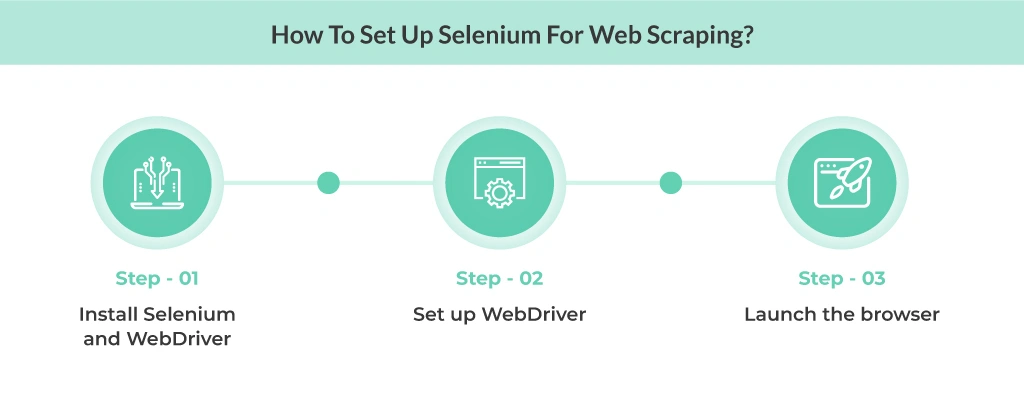

Setting Up Selenium for Web Scraping

To start web scraping with Selenium, you’ll first need to install and set up Selenium with a supported programming language like Python, Java, C#, or JavaScript. Here’s a quick guide to getting everything you need to run Selenium for web scraping.

Installing Selenium and WebDriver

If you’re using Python, you can install Selenium with pip, the Python package manager:

pip install selenium

Once Selenium is installed, you’ll need a WebDriver. A WebDriver is what Selenium uses to control a browser for automation. Choose the appropriate WebDriver for the browser you’ll be using:

- Chrome: Download ChromeDriver

- Firefox: Download GeckoDriver

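Make sure to save the WebDriver executable to a location on your system and note its path. Recent Selenium releases (4.6 and later) also ship with Selenium Manager, which can find or download a matching driver automatically, so the manual download is mainly needed for older versions or locked-down environments.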

Setting Up WebDriver

With the WebDriver downloaded, you can set up Selenium to work with it. Here’s an example of initializing ChromeDriver in Python:

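from selenium import webdriver
from selenium.webdriver.chrome.service import Service

# Replace '/path/to/chromedriver' with the actual path to your ChromeDriver.
# Selenium 4 takes the driver path through a Service object; the older
# executable_path argument is no longer accepted in current releases.
driver = webdriver.Chrome(service=Service('/path/to/chromedriver'))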

Launching the Browser

After setting up the WebDriver, you’re ready to open a browser and navigate to a webpage. Here’s how to launch the browser and open a specific URL:

driver.get("https://example.com")

This command opens the specified URL in the browser controlled by Selenium, allowing you to begin interacting with the page and retrieving data for your scraping tasks.

Finding and Locating Elements on a Web Page

Once you’ve set up Selenium and launched a browser, the next step in web scraping is locating the specific elements you want to extract data from. Selenium offers multiple strategies for finding elements on a webpage, including IDs, class names, CSS selectors, and XPath.

Locating Elements by ID, Class, and XPath

Here are some examples of how to use different locator strategies to identify elements on a page:

- By ID: If the element has a unique ID, you can locate it with find_element and By.ID. (The find_element_by_* helpers used in older tutorials were removed in Selenium 4.3.)

from selenium.webdriver.common.by import By

element = driver.find_element(By.ID, "example-id")

- By Class Name: You can also locate elements by their class name with By.CLASS_NAME.

element = driver.find_element(By.CLASS_NAME, "example-class")

- By XPath: XPath allows for flexible navigation through a document’s structure, making it a powerful choice for complex element searches.

element = driver.find_element(By.XPATH, "//tag[@attribute='value']")

Extracting Text and Attributes

Once you’ve located an element, the next step is to retrieve its text or any attributes you need. Extracting text and attributes is essential for collecting data from the page.

- Extracting Text: To get the visible text of an element, use the .text property.

text = element.text

- Extracting Attributes: To get a specific attribute, such as an image URL or a link, use .get_attribute.

attribute_value = element.get_attribute("attribute_name")
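
To make the two steps above concrete, here is a minimal sketch that gathers an element’s text and a few attributes into one dictionary. The helper name element_to_record is hypothetical (it is not part of Selenium), and the choice of "href" as the default attribute is only an assumption for illustration.

```python
def element_to_record(element, attributes=("href",)):
    """Collect an element's visible text plus selected attributes into a dict.

    `element` is expected to behave like a Selenium WebElement, i.e. to
    expose a `.text` property and a `.get_attribute(name)` method.
    """
    record = {"text": element.text.strip()}
    for name in attributes:
        # get_attribute returns None when the attribute is absent
        record[name] = element.get_attribute(name)
    return record
```

For a link located with find_element, element_to_record(link, ("href", "title")) would return its text together with both attributes, using None for any that are missing.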

Navigating Dynamic Web Pages

Many modern websites load content dynamically with JavaScript, which can make it challenging to scrape data using traditional methods. Selenium is particularly effective for handling these scenarios, as it can simulate user interactions and wait for content to load, giving you access to the data that appears dynamically.

Handling Infinite Scrolling

Infinite scrolling is a common feature on websites, where new content loads as you scroll down the page. To scrape this type of content, you can use Selenium to scroll the page programmatically, triggering the loading of additional items. Here’s how you can implement infinite scrolling in Selenium:

import time

# Scroll down until the end of the page
last_height = driver.execute_script("return document.body.scrollHeight")

while True:
    # Scroll to the bottom
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

    # Wait for new content to load
    time.sleep(2)  # Adjust the sleep duration based on the page loading speed

    # Calculate new scroll height and compare with last scroll height
    new_height = driver.execute_script("return document.body.scrollHeight")
    if new_height == last_height:
        break
    last_height = new_height

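This code keeps scrolling to the bottom until the page height stops growing. The time.sleep() delay gives new content time to load before the next scroll; if the page loads more slowly than the delay, the loop can stop early, so tune the pause for the site you are scraping.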
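
Some feeds never run out of content, so an unbounded loop may never finish. The sketch below wraps the same scroll-and-compare idea in a function with an upper limit on the number of scrolls. The function name scroll_to_end and the max_rounds parameter are assumptions made for this example, not part of Selenium.

```python
import time


def scroll_to_end(driver, pause=2.0, max_rounds=30):
    """Scroll until the page height stops growing or max_rounds is reached.

    Returns the number of scrolls that actually loaded new content.
    """
    get_height = "return document.body.scrollHeight"
    last_height = driver.execute_script(get_height)
    loaded = 0
    for _ in range(max_rounds):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause)  # give the page time to append new items
        new_height = driver.execute_script(get_height)
        if new_height == last_height:
            break  # nothing new appeared, assume the end of the page
        last_height = new_height
        loaded += 1
    return loaded
```

Calling scroll_to_end(driver, pause=2, max_rounds=50) before extracting elements keeps the run time bounded even on endless feeds.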

Handling Infinite Scrolling with Asynchronous Script Execution

When scraping a page with infinite scrolling, you might encounter elements that load slowly due to asynchronous API calls. In such cases, you can write the scroll loop as an asyncio coroutine so that the pause between scrolls uses await asyncio.sleep() instead of time.sleep(). Keep in mind that Selenium’s own calls are still blocking, so this does not make a single scroll loop faster; the benefit is that other coroutines in the same program can run while the loop is waiting for content.

import asyncio

from selenium import webdriver

# Initialize the WebDriver
driver = webdriver.Chrome()

# Open the page with infinite scroll
driver.get("https://example.com/infinite-scroll")

# Simple function to wait for content to load (simulating slow API calls or elements)
async def wait_for_content_to_load():
    print("Waiting for new content to load...")
    await asyncio.sleep(2)  # Simulate waiting time for content to load (can adjust as needed)

# Function to handle scrolling
async def handle_infinite_scrolling(driver):
    last_height = driver.execute_script("return document.body.scrollHeight")
    while True:
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

        # Wait for the new content to load without blocking the event loop
        await wait_for_content_to_load()

        # Check if the page height has changed to know if new content is loaded
        new_height = driver.execute_script("return document.body.scrollHeight")

        # Break the loop if no new content is loaded
        if new_height == last_height:
            break
        last_height = new_height
    print("Reached the end of the page.")

# Start the asynchronous scrolling
async def main():
    await handle_infinite_scrolling(driver)

# Run the async event loop and always close the browser afterwards
if __name__ == "__main__":
    try:
        asyncio.run(main())
    finally:
        driver.quit()
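
Because Selenium calls block the thread they run on, one way to keep an asyncio program responsive is to push the blocking work onto a worker thread with asyncio.to_thread (Python 3.9+). The sketch below illustrates only that pattern: blocking_scroll is a hypothetical stand-in for a real Selenium scroll loop, and heartbeat represents any other coroutine you want to keep running in the meantime.

```python
import asyncio
import time


def blocking_scroll(rounds, pause):
    """Stand-in for a blocking Selenium scroll loop (hypothetical helper)."""
    for _ in range(rounds):
        time.sleep(pause)  # a real loop would call driver.execute_script here
    return rounds


async def heartbeat(ticks, interval, stop):
    """Other async work that should keep running while the scroll blocks."""
    while not stop.is_set():
        ticks.append(time.monotonic())
        await asyncio.sleep(interval)


async def run_together(rounds=3, pause=0.05):
    ticks = []
    stop = asyncio.Event()
    beat = asyncio.create_task(heartbeat(ticks, pause / 2, stop))
    # to_thread runs the blocking function in a worker thread,
    # so the event loop stays free for the heartbeat task
    scrolled = await asyncio.to_thread(blocking_scroll, rounds, pause)
    stop.set()
    await beat
    return scrolled, len(ticks)
```

Running asyncio.run(run_together()) returns the number of completed scroll rounds along with how many heartbeat ticks happened during the scroll, which shows that both ran concurrently.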

Dealing with AJAX Content

Many websites use AJAX (Asynchronous JavaScript and XML) to load data, meaning that elements may appear on the page after the initial load. To handle this, Selenium provides WebDriverWait, which lets you wait for specific elements to load fully before attempting to interact with or scrape them.

Here’s how you can wait for AJAX-loaded content:

from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

# Wait up to 10 seconds until a specific element is present in the DOM
element = WebDriverWait(driver, 10).until(
    EC.presence_of_element_located((By.ID, "dynamic-element"))
)

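With WebDriverWait, your script pauses until the element exists in the DOM, or raises a TimeoutException after 10 seconds, instead of failing immediately. Presence does not guarantee the element is displayed; if you need it to be visible as well, use EC.visibility_of_element_located. This makes it possible to scrape even complex AJAX-based pages reliably.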
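
Conceptually, an explicit wait is a polling loop: check a condition, sleep briefly, and give up after a deadline. The following is a rough sketch of that idea in plain Python, not Selenium’s actual implementation; the name poll_until and its parameters are assumptions made for illustration.

```python
import time


def poll_until(condition, timeout=10.0, interval=0.5):
    """Call condition() repeatedly until it returns a truthy value.

    Returns that value, or raises TimeoutError once `timeout` seconds pass.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)
```

In real scraping code you would normally rely on WebDriverWait itself; the sketch is mainly useful for understanding why a short timeout can fail on slow pages and why the condition is evaluated more than once.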

Automating Form Submissions and User Interactions

Selenium shines in situations where you need to interact with web elements like forms, buttons, and dropdowns, allowing you to automate navigation through pages that require user input or actions. This is especially useful for scraping data that is hidden behind login forms or interactive search pages.

Filling and Submitting Forms

If you need to scrape data from a site that requires filling in a form, such as a login page or a search form, Selenium makes it easy to automate these steps. Here’s how you can locate input fields, enter text, and submit a form:

from selenium.webdriver.common.by import By

# Locate the username and password fields and enter text
username_field = driver.find_element(By.ID, "username")
password_field = driver.find_element(By.ID, "password")

username_field.send_keys("your_username")
password_field.send_keys("your_password")

# Submit the form by locating the submit button and clicking it
submit_button = driver.find_element(By.ID, "submit-button")
submit_button.click()

This approach lets you sign in or submit search forms programmatically, which is essential for reaching gated content on a website.

Handling Buttons and Links

In addition to form submissions, you may need to click buttons or follow links to navigate to the pages where your target data resides. Selenium allows you to simulate these interactions seamlessly.

from selenium.webdriver.common.by import By

# Locate a button or link by its ID, class, or other attribute, and click it
button = driver.find_element(By.ID, "next-page-button")
button.click()

# Alternatively, you can navigate through links
link = driver.find_element(By.LINK_TEXT, "View Details")
link.click()

By automating clicks and link navigation, you can reach deeper pages in a website’s structure, allowing you to scrape the data hidden within interactive elements. With these techniques, you can fully automate browsing, making it easy to scrape data that would typically require multiple user actions to access.
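
A common use of click automation is walking through paginated results. The sketch below shows one possible loop, assuming the site exposes a “next” button that disappears on the last page. The function scrape_all_pages, the scrape_page callback, and the max_pages cap are all hypothetical names introduced for this example.

```python
def scrape_all_pages(driver, scrape_page, next_locator, max_pages=20):
    """Collect rows page by page, clicking "next" until it disappears.

    driver       -- a Selenium WebDriver (or any object with find_elements)
    scrape_page  -- callback that takes the driver and returns a list of rows
    next_locator -- (by, value) tuple, e.g. (By.ID, "next-page-button")
    max_pages    -- safety cap so a misbehaving site cannot loop forever
    """
    rows = []
    for _ in range(max_pages):
        rows.extend(scrape_page(driver))
        # find_elements returns an empty list instead of raising when nothing matches
        next_buttons = driver.find_elements(*next_locator)
        if not next_buttons:
            break
        next_buttons[0].click()
    return rows
```

On a real site the scrape_page callback would usually begin with an explicit wait, so that each click has finished loading the next page before elements are read.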

Example: Scraping an E-commerce Website

Let’s walk through a practical example of using Selenium to scrape product information, such as names, prices, and ratings, from an e-commerce website. This step-by-step guide will demonstrate how to extract multiple data points and store them in a structured format like CSV for easy analysis.

Navigating to the Target Page

The first step is to navigate to the product listing page of the website and ensure it’s fully loaded. For dynamic sites, this might involve waiting for specific elements to load before continuing.

from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

# Navigate to the product listing page
driver.get("https://example-ecommerce.com/products")

# Wait until the products container is loaded
WebDriverWait(driver, 10).until(
    EC.presence_of_element_located((By.CLASS_NAME, "product-container"))
)

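Using WebDriverWait ensures that at least one product container is present before you start scraping. On pages that load products in batches, you may also need to scroll or wait for additional items to appear.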

Extracting Product Information

Now, let’s locate and extract the key details for each product. We’ll look for elements that contain product names, prices, and ratings using appropriate selectors, such as XPath or CSS.

from selenium.webdriver.common.by import By

# Find all product elements on the page
products = driver.find_elements(By.CLASS_NAME, "product-container")

# Loop through each product and extract information
product_data = []
for product in products:
    name = product.find_element(By.CLASS_NAME, "product-name").text
    price = product.find_element(By.CLASS_NAME, "product-price").text
    rating = product.find_element(By.CLASS_NAME, "product-rating").text
    product_data.append({"Name": name, "Price": price, "Rating": rating})

Each product’s name, price, and rating are stored in a list of dictionaries, where each dictionary represents a single product with its relevant details.
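
On real listings some products lack a field (an unrated item, for example), and find_element raises NoSuchElementException in that case. A minimal sketch of a more forgiving extraction is shown below. The helpers safe_text and product_to_dict are hypothetical, the class names are the ones assumed in this example, and the "N/A" default is an arbitrary choice.

```python
# Same string value as selenium.webdriver.common.by.By.CLASS_NAME,
# repeated here so the sketch has no import-time dependency on Selenium.
CLASS_NAME = "class name"


def safe_text(parent, by, value, default=""):
    """Return the text of the first matching child, or `default` if none exists."""
    matches = parent.find_elements(by, value)  # empty list when nothing matches
    return matches[0].text.strip() if matches else default


def product_to_dict(product):
    """Build one record from a product container element."""
    return {
        "Name": safe_text(product, CLASS_NAME, "product-name"),
        "Price": safe_text(product, CLASS_NAME, "product-price"),
        "Rating": safe_text(product, CLASS_NAME, "product-rating", default="N/A"),
    }
```

With these helpers, the loop body reduces to product_data.append(product_to_dict(product)), and a missing rating no longer aborts the whole run.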

Storing the Data in a CSV File

Finally, we can save the scraped data in a CSV file for further analysis. Python’s built-in csv module makes this easy.

import csv

# Write the collected rows to products.csv with a header line
with open("products.csv", mode="w", newline="", encoding="utf-8") as file:
    writer = csv.DictWriter(file, fieldnames=["Name", "Price", "Rating"])
    writer.writeheader()
    writer.writerows(product_data)

This code saves your extracted product information into products.csv, where you can easily analyze or visualize it later. With this approach, you’re ready to collect structured data from e-commerce websites for your analysis or project needs.

Best Practices for Web Scraping with Selenium

When using Selenium for web scraping, following best practices can ensure that your scraping is both efficient and ethical. Here are some key guidelines to help you scrape responsibly.

Respect Robots.txt

Before scraping a website, check its robots.txt file (located at https://website.com/robots.txt). This file specifies which parts of the site are off-limits for automated access. Even though robots.txt isn’t legally binding, respecting it is an ethical practice that promotes good relations with website administrators.
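
Python’s standard library can evaluate robots.txt rules for you. The sketch below uses urllib.robotparser with an inlined, made-up robots.txt so that it runs offline; the rules, the URLs, and the helper names build_parser and is_allowed are assumptions for illustration only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the example runs offline
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /checkout
"""


def build_parser(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def is_allowed(parser, url, user_agent="*"):
    """Return True if the rules permit `user_agent` to fetch `url`."""
    return parser.can_fetch(user_agent, url)
```

For a live site you would instead call parser.set_url() with the site’s robots.txt address followed by parser.read(), and then check each URL with is_allowed before passing it to driver.get().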

Limit Request Frequency

Sending requests too frequently can overload the website’s server and may lead to your IP being blocked. To avoid this, implement delays between interactions with the page:

import time

# Add a delay after each request

time.sleep(2) # Adjust based on site load speed and traffic

Using time.sleep() between actions prevents overwhelming the site and helps ensure your scraping is less detectable and disruptive.
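
A fixed delay works, but a small random variation spreads requests out more evenly. Here is a minimal sketch of such a pause; the helper name polite_pause and the default range of 1 to 3 seconds are assumptions, so pick values that suit the site you are working with.

```python
import random
import time


def polite_pause(min_seconds=1.0, max_seconds=3.0):
    """Sleep for a random duration in [min_seconds, max_seconds] and return it."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay
```

Calling polite_pause() after each driver.get() or click keeps the pacing logic in one place, which makes it easy to slow the whole scraper down later.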

Handle Browser Windows Carefully

Each browser window or tab that Selenium opens consumes system resources. Make sure to close unnecessary browser instances once you’ve finished your scraping tasks:

# Close the current tab

driver.close()

# Quit the entire browser session

driver.quit()

Properly closing sessions minimizes memory use and ensures that Selenium can run smoothly, especially when working with large volumes of data or multiple websites.
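
If the script raises an exception halfway through, a bare driver.quit() at the end never runs and the browser stays open. One way to guard against that is a small context manager, sketched below; managed_driver is a hypothetical helper, and factory can be any callable that returns a driver, such as webdriver.Chrome.

```python
from contextlib import contextmanager


@contextmanager
def managed_driver(factory):
    """Create a driver with `factory` and always quit it afterwards."""
    driver = factory()
    try:
        yield driver
    finally:
        # Runs on normal exit and when the body raises
        driver.quit()
```

Used as `with managed_driver(webdriver.Chrome) as driver:`, the scraping code goes inside the with block and the browser is closed even when an error interrupts it.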

Limitations of Selenium for Web Scraping

Selenium operates by controlling a full browser instance (e.g., Chrome or Firefox), which can be resource-intensive and slow compared to more lightweight scraping libraries. Each action you perform in Selenium—whether navigating to a page, clicking a button, or extracting data—requires interaction with the browser, leading to slower performance.

Additionally, running a browser instance consumes significant system resources (e.g., CPU, memory), making it less efficient for scraping large volumes of data, especially when speed is critical.

When to Use Other Tools

While Selenium excels at handling JavaScript-heavy and dynamic websites, there are situations where other tools may be more suitable. If you’re scraping static websites or need faster performance, you might consider using libraries like BeautifulSoup or Scrapy:

- BeautifulSoup: Best for scraping static web pages where content is already available in the HTML source. It’s lightweight and works well in combination with requests to retrieve and parse static HTML.

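- Scrapy: A robust, high-performance web scraping framework designed for large-scale scraping projects. Scrapy works directly on HTTP responses, which makes it more efficient than Selenium for scraping high volumes of data. It does not execute JavaScript on its own, so dynamic pages require pairing it with a rendering add-on or a headless browser.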

Final Thoughts

With Selenium, you can scrape dynamic, JavaScript-heavy pages and automate user interactions, making it a powerful tool for complex web scraping projects. While it’s slower than traditional scraping methods, Selenium’s flexibility makes it the go-to option for sites that require user-like navigation.
