Your software development plan or marketing campaign could determine whether your next product succeeds or fails – and a straightforward way to know if your choices will be successful is A/B testing.

In short, A/B testing is a tool for optimizing your strategy and making data-informed decisions. Whether tweaking a landing page, refining an email campaign or altering a product feature, A/B testing can tell you what’s working for audiences and what’s not.

In this guide, we’ll explain what A/B testing is, how it works and how you can use it on your projects. Let’s get started by finding out what it means.

What is A/B Testing?

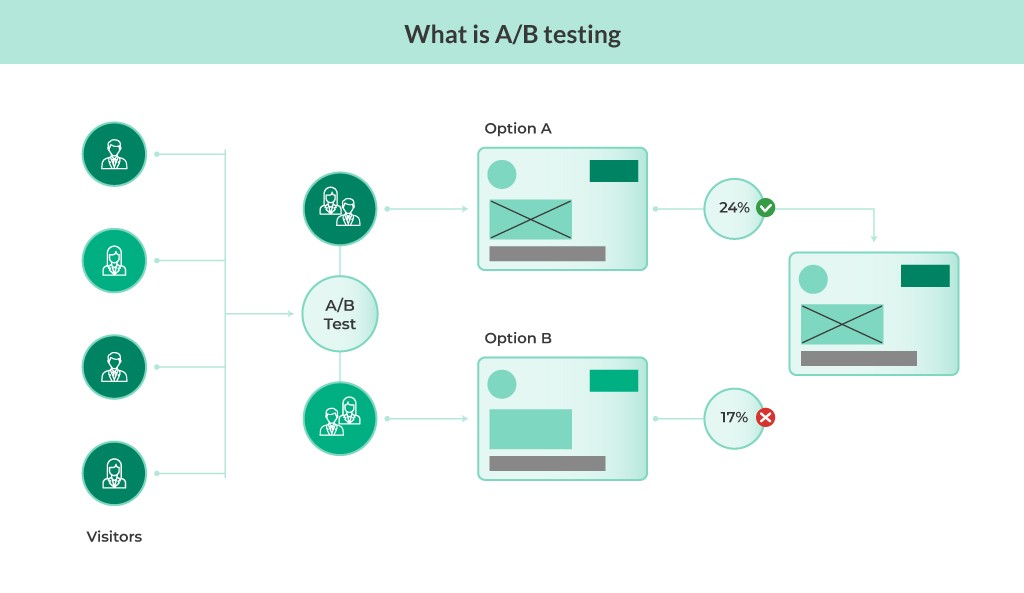

A/B testing, or split testing, is a method that compares two versions of a variable, such as a web page, email, or product feature, to determine which one performs better. The core idea is simple: you split your audience into two segments and show each segment a different version.

By measuring the performance of these versions, you can see which resonates more with your audience.

The main purpose of A/B testing is to use data-driven insights to make informed decisions. By understanding which version performs better, you can optimize for higher conversion rates, better user engagement, or other key metrics that matter to your business.

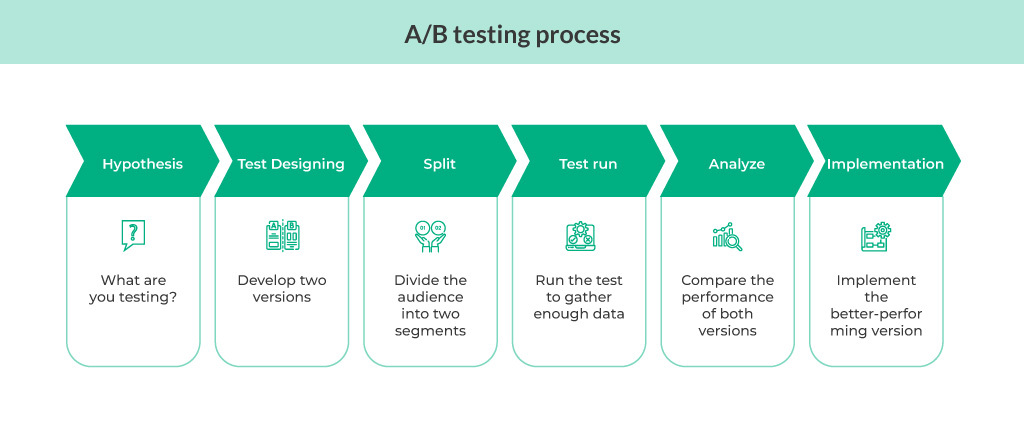

The typical process of A/B testing includes several key steps:

- Create a Hypothesis: Start by identifying what you want to test and why. For example, “I believe changing the color of the ‘Buy Now’ button will increase sales.”

- Design the Test: Develop Version A (the control) and Version B (the variant). The difference between them should be clear and focused on one specific element.

- Split the Audience: Randomly divide your sample group into two segments. Show Version A to one group and Version B to the other.

- Run the Test: Let the test run for a sufficient period to gather enough data for analysis.

- Analyze the Results: After the test, compare the performance of both versions. Determine which one more effectively achieved the desired outcome.

- Implement the Best Version: If the results are statistically significant, implement the better-performing version across your audience.
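The "Split the Audience" step above can be sketched in a few lines of Python. This is a minimal illustration, not a production assignment service: it assumes each visitor has a stable identifier, and the function name `assign_variant`, the experiment label, and the user IDs are all hypothetical. Hashing the ID, rather than drawing a fresh random number on each visit, keeps a returning user in the same group.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "buy-now-button-color") -> str:
    """Deterministically map a user to 'A' (control) or 'B' (variant)."""
    # Hash the experiment name together with the user ID so the same user
    # always lands in the same group for this experiment.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % 100  # bucket number in 0..99
    return "A" if bucket < 50 else "B"


# Hypothetical user IDs, for illustration only.
users = [f"user-{i}" for i in range(10_000)]
counts = {"A": 0, "B": 0}
for uid in users:
    counts[assign_variant(uid)] += 1

print(counts)  # the two groups should be close to a 50/50 split
```

Including the experiment name in the hash means a user who falls into group A for one test is not automatically in group A for every other test.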

Why Should You Consider A/B Testing?

A/B testing is a powerful tool for making informed, data-driven decisions. It takes the guesswork out of the equation, allowing you to understand what truly resonates with your audience and how you can optimize your strategies for better outcomes.

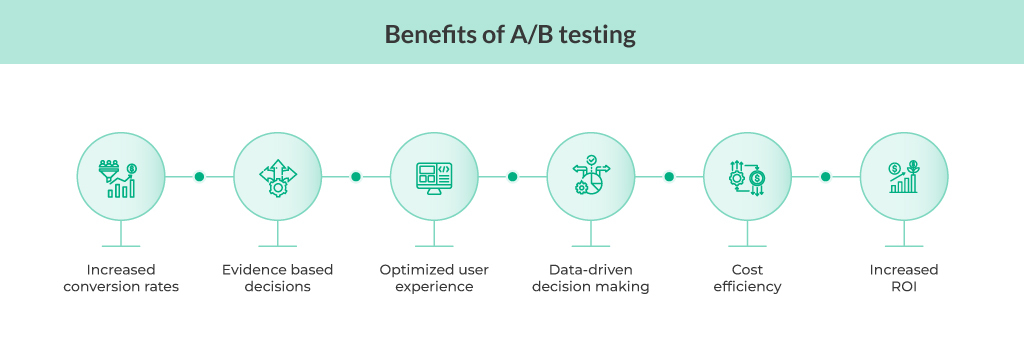

Evidence-Based Decisions

One major advantage of A/B testing is that it offers concrete data on what works and what doesn’t. Instead of depending on assumptions or gut feelings, you can decide based on user behavior.

This evidence-based approach reduces the risk of making changes that might not have the desired effect and helps ensure that your strategies are grounded in reality.

Optimization

A/B testing is a key component in the ongoing optimization process. By continuously testing different versions of your web pages, emails, or product features, you can refine them to improve user experiences, increase engagement, and achieve better overall results.

Cost Efficiency

Making changes without testing can lead to costly mistakes in time and resources. A/B testing allows you to test ideas on a smaller scale before fully committing, ensuring that your investments are directed toward strategies that have been proven to work.

This can save money by preventing the implementation of ineffective changes and ensuring that your resources are used efficiently.

Enhance User Experience

User experience (UX) is pivotal in retaining customers and encouraging repeat interactions. A/B testing allows you to evaluate how design, content, or functionality changes impact user satisfaction.

For instance, testing different layouts or content structures can reveal which version provides a more intuitive and engaging experience. Improving UX benefits user retention and contributes to positive brand perception.

Increase ROI on Marketing Efforts

Marketing budgets are often tight, and maximizing return on investment (ROI) is essential.

A/B testing helps you refine your marketing strategies by evaluating the effectiveness of various elements such as email subject lines, ad copy, or landing page designs.

By optimizing these elements based on real data, you ensure that every dollar spent is more likely to deliver a higher return.

What Can You Test with A/B Testing?

A/B testing offers a versatile approach to improving various aspects of your projects by testing and optimizing different elements and variables.

Here are some key areas where A/B testing can make a significant impact:

1. Website Elements

- Landing Pages: Test different designs, headlines, or calls-to-action (CTAs) to determine which layout or messaging drives more conversions. For instance, compare a minimalist landing page against a more detailed one to see which performs better.

- Headlines: Experiment with different headline styles to find which ones capture attention and engage visitors. Try variations that emphasize different benefits or use different tones.

- CTA Buttons: Test variations in the color, size, text, and placement of CTA buttons to see which combination leads to higher click-through rates. For example, test a “Sign Up Now” button against a “Get Started” button to gauge which drives more user action.

- Images and Media: Evaluate how different images, videos, or other media elements affect user engagement. Compare a page with a large hero image versus one with a smaller or no image.

2. Email Marketing

- Subject Lines: Test various subject lines to see which ones achieve higher open rates. For instance, try a subject line that creates urgency versus one that offers value to determine which resonates better with your audience.

- Email Content: Experiment with different formats, lengths, or styles of email content. Compare a text-heavy email against a more visual one to determine which format leads to better engagement.

- Send Times: Test different times and days of the week to identify when your audience is most likely to open and interact with your emails. For example, compare emails sent in the morning versus those sent in the afternoon.

- Call-to-Action: Test different CTAs within your emails to see which one drives more clicks or conversions. For example, compare a CTA that directs users to a webinar registration page against one that leads to a product demo.

3. Advertising Campaigns

- Ad Copy: Test variations in your ad copy to see which messages resonate more with your target audience. For instance, compare ads with different value propositions or calls to action.

- Visuals: Experiment with different images, graphics, or videos in your ads. Test contrasting visuals to determine which captures attention and leads to better performance.

- Targeting Options: Test different audience segments or criteria to determine which groups respond better to your ads. For example, performance can be compared among different demographic groups or interests.

- Ad Formats: Compare different ad formats, such as text ads, display ads, or video ads, to see which yields higher engagement and conversion rates.

4. Product Features

- Feature Placement: Test different placements of new features or tools within your product to see where they are most effectively used. For example, compare a feature located in the sidebar versus one in the main navigation menu.

- Design and Layout: Experiment with different designs or layouts for your product’s interface. Test variations to see which design improves user experience and usability.

- Pricing Models: Test different pricing structures, such as subscription versus one-time payments, to determine which model better meets user preferences and drives sales.

5. User Experience

- Navigation: Test different navigation structures or menu layouts to see which one provides a more intuitive and user-friendly experience. For example, test a simplified menu against a more detailed one.

- Forms: Experiment with variations in form fields, design, and length to optimize form submissions. For instance, compare a long form against a shorter, more streamlined version to see which leads to higher completion rates.

- Checkout Process: Test different elements of your checkout process, such as the number of steps, form fields, or payment options. Identify which variations reduce cart abandonment and increase conversions.

6. Content and Copy

- Blog Titles: Test different blog post titles to see which attract more clicks and readers. For example, compare titles that use specific numbers or questions versus those that are more descriptive.

- Content Layout: Experiment with different content formats, such as listicles versus narrative posts, to see which format resonates more with your audience.

- Call-to-Action in Content: Test different CTAs within your content to see which prompts more engagement or conversions. For instance, compare a CTA that encourages a download versus one that invites users to sign up for a newsletter.

7. Customer Support

- Support Channels: Test different customer support channels, such as live chat versus email support, to see which channel leads to quicker resolutions and higher customer satisfaction.

- Response Times: Experiment with different response times for customer inquiries to identify which response time enhances customer satisfaction and reduces churn.

- Support Scripts: Test variations in support scripts or responses to determine which approach improves customer interactions and resolutions.

Types of A/B Testing

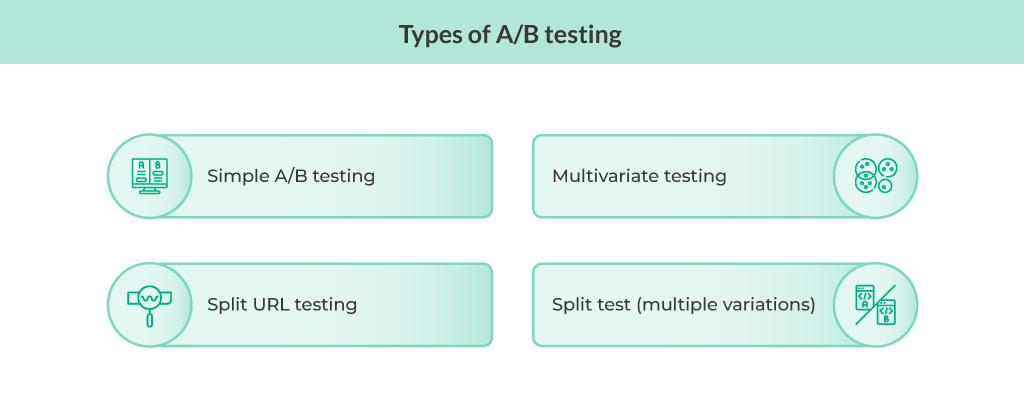

A/B testing comes in several forms, each suited to different types of experiments and objectives. Understanding these different methods can help you choose the right approach for your needs. Here’s a look at the various types of A/B testing and how they can be applied:

Simple A/B Testing

The most common type of A/B testing compares two versions of a single element, Version A (the control) and Version B (the variant), to see which performs better. This method is ideal for testing a straightforward change, such as modifying a headline, button color, or image, to see whether it improves user engagement or conversion rates.

Split URL Testing

Split URL testing is a technique where two different page versions are hosted on separate URLs. Users are randomly directed to one of these URLs, and their interactions are tracked and compared. This type of testing helps evaluate major changes to a page, such as a complete redesign or a significant shift in layout, where hosting the variations on separate URLs is more practical.

Multivariate Testing

Unlike simple A/B testing, which focuses on a single change, multivariate testing involves testing multiple variables simultaneously. For example, you might test combinations of headlines, images, and buttons to see which combination works best. This method helps you understand the combined effect of several changes and is particularly useful when you want to optimize multiple elements on a page at once.
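To get a feel for how quickly a multivariate test grows, here is a small sketch that enumerates every combination of three page elements. The headline, image, and button values are made up for illustration, and the sketch assumes every option is tested against every other option.

```python
from itertools import product

# Hypothetical options for each element under test.
headlines = ["Save time today", "Work smarter"]
images = ["hero-photo", "illustration"]
buttons = ["Sign Up Now", "Get Started"]

# Every combination of one headline, one image, and one button.
combinations = list(product(headlines, images, buttons))

print(len(combinations))  # 2 * 2 * 2 = 8 variants
for i, (headline, image, button) in enumerate(combinations, start=1):
    print(f"Variant {i}: {headline} | {image} | {button}")
```

Each added option multiplies the number of variants, and every variant needs its own share of traffic, so multivariate tests generally call for a larger audience than a simple A/B test.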

Split Test (Multiple Variations)

In a split test with multiple variations, you compare more than two versions. This approach allows you to test several variations of an element or page against each other to identify the best performer. For instance, you might test four headlines simultaneously to see which resonates most with your audience. This method is helpful when you have multiple ideas and want to determine the best option quickly.

A/B Testing Statistical Approach

To make valid and reliable conclusions from A/B testing, it’s crucial to apply the right statistical methods. Proper analysis ensures that the differences you observe between versions are meaningful and not just due to random chance. Let’s explore the key statistical concepts involved in A/B testing:

Sample Size

One of the first steps in A/B testing is determining an appropriate sample size. The sample size affects the reliability of your results. A sample that is too small may lead to inaccurate conclusions, while a larger sample gives you more confidence in your findings.

Calculating the right sample size involves considering the expected effect size (the difference you expect to see between versions), the desired confidence level, and the power of the test. Ensuring an adequate sample size helps you achieve statistical significance and reliable results.
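As a rough illustration of how these inputs fit together, the sketch below uses the standard normal-approximation formula for comparing two conversion rates. It assumes a two-sided test and equal group sizes. The function name and the example rates (10% for the control, 12% for the variant) are hypothetical, and a dedicated sample size calculator may use a slightly different formula.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p_control, p_variant, alpha=0.05, power=0.80):
    """Approximate users needed in EACH group to detect p_control vs p_variant."""
    # z-score for the significance level (two-sided) and for the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    # Sum of the binomial variances of the two groups.
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control  # expected effect size
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical rates: 10% baseline conversion, hoping to detect 12%.
n = sample_size_per_group(0.10, 0.12)
print(n)  # a few thousand users per group

# A smaller expected effect needs a much larger sample.
print(sample_size_per_group(0.10, 0.11))
```

The effect size sits in the denominator squared, which is why halving the difference you want to detect roughly quadruples the required sample.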

Statistical Significance

Statistical significance is a measure that helps you determine whether the differences observed in your A/B test are likely due to the changes you made rather than random variation. Typically, a result is considered statistically significant if the p-value is below 0.05, meaning a difference at least this large would show up less than 5% of the time if the two versions actually performed the same.
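One common way to obtain a p-value for conversion data is a two-proportion z-test. The sketch below is one possible implementation under the normal approximation, which assumes reasonably large groups. The function name and the visitor and conversion counts are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate: the best estimate if both versions perform the same.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 400 of 4,000 users convert on A, 480 of 4,000 on B.
z, p_value = two_proportion_z_test(400, 4000, 480, 4000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("significant at 5%" if p_value < 0.05 else "not significant at 5%")
```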

Confidence Intervals

Confidence intervals provide a range of values within which the actual effect size (the real impact of the change) is likely to lie. For example, if you find that Version B increases conversions by 5%, a confidence interval might indicate that the true increase is likely to lie between 3% and 7%.

Confidence intervals give you a sense of how precise your estimate is and help you understand the potential variability in your results. They are essential for interpreting the practical significance of your findings beyond just knowing which version is better.
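A confidence interval for the difference between two conversion rates can be approximated as follows. This is a sketch using the normal (Wald) approximation, which assumes reasonably large groups. The function name and the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def diff_confidence_interval(conv_a, n_a, conv_b, n_b, confidence=0.95):
    """Approximate confidence interval for (rate of B - rate of A)."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Standard error of the difference, without pooling the two groups.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    diff = p_b - p_a
    return diff - z * se, diff + z * se


# Hypothetical data: 400 of 4,000 users convert on A, 480 of 4,000 on B.
low, high = diff_confidence_interval(400, 4000, 480, 4000)
print(f"Observed lift: 2.0 points, 95% CI: {low:.1%} to {high:.1%}")
```

If the interval excludes zero, the data point to a real difference. How far the lower bound sits from zero gives a sense of whether that difference is large enough to matter in practice.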

A/B Testing Examples

Let’s look at some examples across different scenarios to understand how A/B testing is beneficial for businesses and organizations:

E-commerce Example

Imagine an online store testing two versions of a product page. Version A features a standard layout with product details and a buy button in the usual spot.

Version B prominently displays customer reviews and testimonials near the top of the page.

After conducting the test, the store discovers that Version B increases conversion rates by 15%.

This significant improvement allows the store to roll out Version B across all product pages, boosting sales and customer trust.

Email Marketing Example

A company wants to determine the best subject line for its email campaign to maximize open rates.

To this end, they test Version A, which uses a straightforward subject line like “Weekly Newsletter,” against Version B, which features a more creative and engaging line such as “Unlock This Week’s Exclusive Deals!”

The results show that Version B’s open rate is 10% higher. This insight helps the company craft compelling subject lines in future campaigns, enhancing its email marketing effectiveness and engagement rates.

Landing Page Example

A startup aims to increase user sign-ups for its service through a landing page. To this end, it tests two different call-to-action (CTA) buttons: Version A with “Sign Up Now” and Version B with “Get Started Today.”

The test reveals that Version B results in a higher number of sign-ups. With this knowledge, the startup implements Version B across all its landing pages, leading to increased user registrations and improved conversion rates.

Tips for Successful A/B Testing

Here are some best practices that can help you make the most of A/B testing:

- Test One Variable at a Time: Avoid testing multiple variables simultaneously so that you can accurately attribute changes in performance to a specific element.

- Ensure Statistical Significance: Use a sample size calculator to determine the number of participants needed to achieve reliable results. Small sample sizes can lead to misleading conclusions.

- Monitor External Factors: Be aware of external factors that might affect the test results, such as seasonal trends, holidays, or changes in user behavior.

- Iterate and Optimize: A/B testing is an ongoing process. Use the insights gained from each test to make incremental improvements and continue testing new ideas.

- Document Everything: Keep detailed records of your tests, including hypotheses, variations, and results. This helps you analyze trends and avoid repeated mistakes.

Common A/B Testing Mistakes to Avoid

Avoiding common pitfalls is crucial to ensure that your A/B testing yields accurate and actionable results. Here are some key mistakes to watch out for and how to address them:

Insufficient Sample Size

One of the most critical mistakes in A/B testing is using an insufficient sample size. A sample that’s too small can lead to unreliable results and might not accurately represent your entire audience.

Small sample sizes increase the risk of variability and random chance affecting your outcomes, making it difficult to draw meaningful conclusions.

To avoid this, calculate the appropriate sample size needed to achieve statistical significance, considering the effect size and desired confidence level. This ensures your results are reliable and representative of your broader user base.

Ignoring Statistical Significance

Ignoring statistical significance can result in misleading conclusions. Statistical significance helps you determine whether the observed differences between versions are likely due to the changes you made rather than random variation.

If you don’t check for statistical significance (typically a p-value of less than 0.05), you might make decisions based on noise rather than genuine insights.

Always assess the significance of your results to ensure that your conclusions are robust and that the observed effects are real.

Testing Too Many Variables

Testing too many variables simultaneously can complicate your analysis and lead to inconclusive results.

When you experiment with multiple changes simultaneously, such as different headlines, images, and button colors, it’s challenging to pinpoint which specific element is driving the observed differences.

Instead, focus on testing one variable at a time or use multivariate testing to assess the impact of multiple elements. This approach helps isolate the effects of each change and provides clearer, more actionable insights.

Neglecting Proper Experiment Design

A well-structured experiment design is essential for valid and reliable A/B testing results. Neglecting proper design can lead to biased outcomes and invalid conclusions.

Ensure your experiment is carefully planned, with clear hypotheses, randomization, and consistent conditions across test groups.

Avoid biases such as seasonal effects or external influences that might skew your results. A solid experiment design ensures that your test is fair, controlled, and capable of providing actionable insights.

Final Thoughts

A/B testing is a powerful tool for optimizing strategies and improving performance across various aspects of your business, from e-commerce and email marketing to landing pages and product features. By comparing two versions of a variable, A/B testing provides valuable data-driven insights that can lead to more informed decisions and better results.

For QA testers, A/B testing is about more than running experiments: it also means ensuring the accuracy and reliability of the results.

QA Touch is an effective test management platform that streamlines the A/B testing process. It helps you design, execute, and analyze tests precisely.

Start your 14-day free trial today.